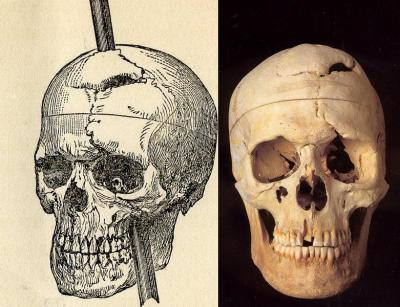

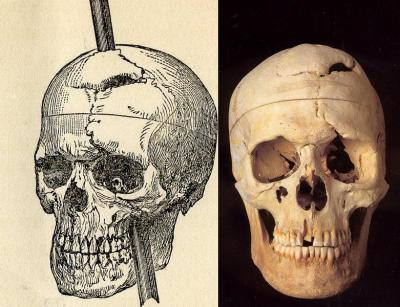

On September 13, 1848, a 25-year-old foreman named Phineas P. Gage was working on a railroad with his work team. In an unfortunate turn of events, as he was using a tamping iron (a large iron rod with a pointed end, measuring 3 feet 7 inches in length and 1.25 inches in diameter) to pack gunpowder into a hole, the powder detonated. The forceful explosion drove the rod skyward through Gage’s left cheek, into his brain and out through the top of his skull, landing dozens of metres away. His workmates, who presumed him dead, rushed to his assistance and, to their surprise, found that he was still alive.

In fact, Phineas Gage spoke within a few minutes of the incident, walked without assistance and returned to his lodging in town without much difficulty – albeit with two gaping holes in his head, oozing blood and brain everywhere. He was soon seen by a physician, who remarked on his survival. Gage was reportedly well enough to tell the doctor: “Here is business enough for you”. Another physician, Dr John Harlow, took over the case, tended to the wound, closed up the hole and recorded that Gage had no immediate neurological, cognitive or life-threatening symptoms.

By November, he was stable and strong enough to return to his home, along with the rod that nearly killed him. His family and friends welcomed him back and did not notice anything other than the scar left by the rod and the fact that his left eye was closed. But this was when things started to get interesting.

Over the following few months, Gage’s friends found him “no longer Gage”, stating that he was behaving very differently from the man he had been before the accident. Dr Harlow wrote that the balance between his “intellectual faculties and animal propensities” had seemingly been destroyed. Gage became more aggressive, inattentive, unable to keep a job, verbally abusive and sexually disinhibited. He would frequently swear using the most offensive profanities and would be as sexually suggestive as a March hare. How did the iron rod cause such a dramatic change in Gage’s personality?

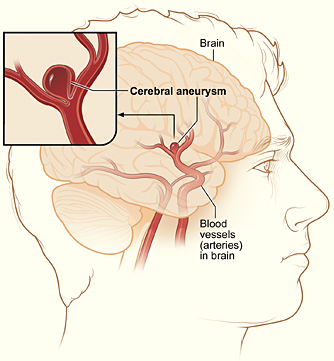

Phineas Gage would go on to become one of the most famous patient cases in the history of modern medicine. His case was the first to suggest some sort of link between the brain and personality. Neurologists noted that the trauma and subsequent infection destroyed much of Gage’s left frontal lobe – the part of the brain that we now associate with a person’s logical thinking, personality and executive functions. It is in essence the “seat of the mind”. Ergo, Gage’s loss of one of his frontal lobes meant that his control of bodily functions, movement and other important brain functions like memory was undisturbed, while his “higher thinking” was largely destroyed (he was essentially lobotomised). This explains Dr Harlow’s observation of his “animal propensities”.

Thanks to this case, a great discussion was sparked and the idea that different parts of the brain govern different aspects of the mind was conceived. We can now pinpoint almost exactly where the language area lies, which part controls movement and how a certain part of the brain converts short-term memory into long-term memory.